Generative artificial intelligence (AI) systems have become remarkably capable in a relatively short time, but they're not infallible. AI chatbots and text generators can be pretty unpredictable, especially until you learn how to prompt effectively.

If you leave AI models with too much freedom, they might provide inaccurate or even contradictory information. This guide explains why this happens and how to avoid it so your AI tool generates trustworthy content.

What you will learn

- What AI hallucination is and why it happens

- How hallucinations impact your content

- What AI hallucination looks like in practice

- How to stop your AI model from generating inaccurate information

What is AI hallucination?

AI hallucination is a phenomenon in which LLMs (large language models) generate inaccurate information and responses.

These inaccuracies can range from mild deviations from facts to completely false or made-up information.

This issue is quite common—so much so that ChatGPT, the best-known generative AI system, has a disclaimer warning users about "inaccurate information about people, places, or facts."

AI models like ChatGPT are trained to predict the most plausible next word of a response based on the user's query (also known as a prompt). Because the model predicts what sounds plausible rather than checking facts, these predictions aren't always accurate.

That's why, by the end of the generation process, you may end up with a response that steers away from facts.
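To see why fluent output isn't the same as accurate output, here's a deliberately tiny sketch in Python. It assumes a made-up four-sentence "training corpus" and a simple bigram model that always picks the most frequent next word. Real LLMs are vastly larger and work on tokens rather than whole words, but they share the habit of choosing a likely continuation without checking it against facts.

```python
from collections import Counter, defaultdict

# Hypothetical training corpus. Note that only one sentence mentions the VLT.
CORPUS = [
    "webb telescope photographed distant galaxies",
    "webb telescope photographed distant galaxies",
    "hubble telescope photographed distant galaxies",
    "vlt telescope photographed first exoplanet",
]


def train_bigrams(sentences):
    """Count how often each word is followed by each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def generate(counts, start, max_words=10):
    """Greedily append the most frequent next word; nothing checks the facts."""
    words = [start]
    while len(words) < max_words and counts[words[-1]]:
        next_word, _ = counts[words[-1]].most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


model = train_bigrams(CORPUS)
print(generate(model, "vlt"))
```

The generated sentence ("vlt telescope photographed distant galaxies") is grammatical and sounds plausible, yet no sentence in the training data says it. The model simply followed the most common path after "photographed."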

These deviations aren't always obvious, mainly because a language model can produce highly fluent and coherent text that makes it seem like you shouldn't doubt the response.

This is why fact-checking an AI model's output is crucial to ensuring your content doesn't contain false information.

What causes AI hallucination?

One major cause of AI hallucination is improper, low-quality training data. The output of a generative AI model directly reflects the datasets it was trained on, so if gaps in that data leave room for so-called "edge cases," the model might not give an accurate response.

A good example of such an issue is overfitting, which happens when an AI model fits the data used for its training too closely.

When this happens, the model generalizes poorly to new data, so forcing it to respond to inputs unlike its training examples can lead to false information.

If this concept sounds too complex, here's a simplified example that clarifies it:

Let's say you asked an AI tool to draft a commercial real estate purchase agreement. If the tool was trained on residential real estate data and overfitted, it may not have had enough exposure to commercial agreements to understand the differences between them.

It would still generate a draft because you prompted it, but it may leave out important sections specific to commercial agreements or even make them up.
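The same failure can be sketched as a toy Python "model" that has memorized only residential agreements and answers every request with its closest memorized example. The section names and the word-overlap matching are hypothetical simplifications, not how a real LLM drafts contracts. The point is that the toy model never says "I don't know."

```python
# Hypothetical "training data": section lists memorized from residential
# agreements only. Nothing here covers commercial property.
TRAINING_DATA = {
    "residential purchase agreement for a house": [
        "Parties", "Property description", "Purchase price",
        "Home inspection", "Closing date",
    ],
    "residential purchase agreement for a condo": [
        "Parties", "Property description", "Purchase price",
        "HOA documents", "Closing date",
    ],
}

# Illustrative sections a commercial agreement might need (hypothetical list).
COMMERCIAL_SECTIONS = [
    "Zoning and permitted use",
    "Existing tenant leases",
    "Environmental assessment",
]


def draft_sections(request):
    """Return the sections of the memorized example that shares the most words."""
    request_words = set(request.lower().split())

    def overlap(example):
        return len(request_words & set(example.split()))

    best_match = max(TRAINING_DATA, key=overlap)  # always returns *something*
    return TRAINING_DATA[best_match]


draft = draft_sections("Commercial real estate purchase agreement")
missing = [section for section in COMMERCIAL_SECTIONS if section not in draft]
print(draft)
print("Missing:", missing)
```

The toy model confidently returns a residential outline, and every commercial-specific section is missing.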

Language-related challenges can also contribute to hallucinations. AI must stay up-to-date on the constant evolution of language to avoid misinterpretations caused by new terminology, slang expressions, and idioms.

For best results, use clear, plain language when prompting an AI tool.

Why is AI hallucination a problem?

AI hallucination isn't merely an error in computer code—it has real-life implications that can expose your brand to significant dangers.

The main consequence you might suffer is a loss of consumer trust after putting out false information. Your reputation might take a hit that takes a long time to repair.

Another inconvenience caused by AI hallucinations is prolonged research.

If your AI tool keeps responding with inaccurate information, you can't confidently publish a piece before fact-checking everything.

In some cases, this can take longer than it would to do your own research manually.

The dangers of AI hallucinations are particularly visible in YMYL (Your Money, Your Life) topics.

Google looks for a high degree of E-E-A-T (Experience, Expertise, Authoritativeness, and Trustworthiness) when ranking such pages, so any inaccuracies can damage your SEO standing.

Worse yet, hallucinations may lead to your AI tool generating content that negatively impacts the reader's well-being.

None of this means you should steer away from AI when creating content. You just need to mitigate hallucinations so your AI tool provides accurate, reliable information.

8 ways to prevent AI hallucinations

While you may not have complete control over your AI tool's output, there are many ways to minimize the risk of it making up information. Here are some of the most effective steps to prevent AI hallucinations:

1. Provide relevant information

AI models require proper context to yield accurate results. Without it, the output is quite unpredictable and most likely won't meet your specific expectations. You need to explain to AI what you're looking for and give it a bigger picture of your content.

It's also a good idea to direct your prompt with specific data and sources.

This way, your AI model will know exactly where to pull its information from, which reduces the risk of hallucinations.

So what does this look like in practice?

It all comes down to avoiding vague prompts and giving AI as many specifics as possible.

For example, instead of saying, "Write an introduction to an article about the digital marketing industry," your prompt can be something like:

"Write a 150–200-word introduction to an article about the state of the digital marketing industry. The article will be published on an SEO blog, and the tone should be friendly and authoritative. Use official .gov sources to provide relevant statistics about the industry's current state and predictions."

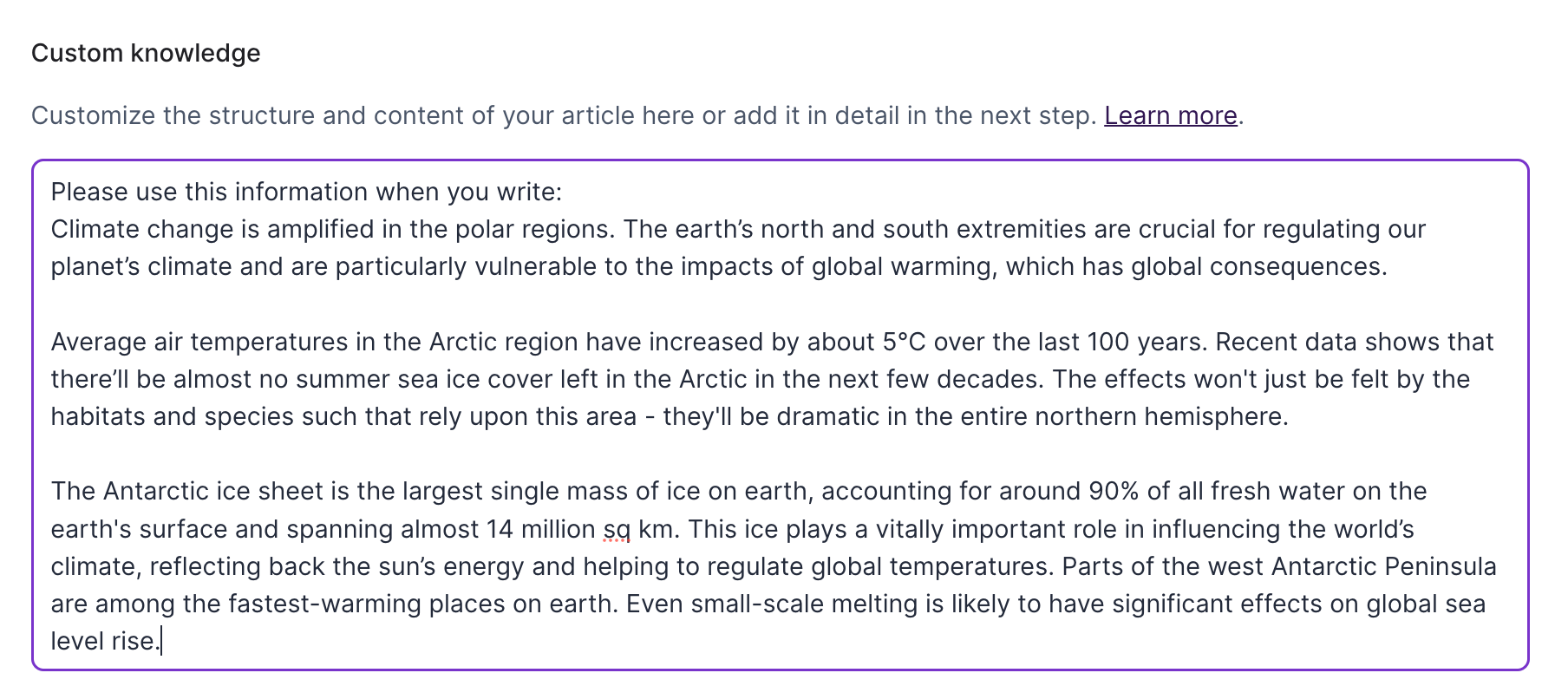

Surfer AI, for example, lets you add specific information using its custom knowledge feature. Let's say you are writing an article on fighting climate change.

You can instruct Surfer to include information that details the impact of climate change in the polar region.

By specifying the length, tone, sources, and use of the content you want your AI tool to write, you'll give it enough direction to ensure accuracy while reducing the need for heavy rewrites.
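If you write prompts regularly, it can help to treat these specifics as a checklist. The Python sketch below assembles a prompt like the digital marketing example above from explicit fields. The `PromptSpec` class and its field names are hypothetical and not part of any AI tool's API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class PromptSpec:
    """Hypothetical checklist of the details a specific prompt should include."""
    task: str
    word_range: Tuple[int, int]
    venue: str
    tone: str
    sources: List[str] = field(default_factory=list)


def build_prompt(spec: PromptSpec) -> str:
    """Turn the checklist into one prompt: length, task, venue, tone, sources."""
    low, high = spec.word_range
    parts = [
        f"Write a {low}-{high}-word {spec.task}.",
        f"The article will be published on {spec.venue}, and the tone should be {spec.tone}.",
    ]
    if spec.sources:  # only add the sourcing rule when sources are given
        parts.append(f"Use {', '.join(spec.sources)} to provide relevant statistics.")
    return " ".join(parts)


spec = PromptSpec(
    task="introduction to an article about the state of the digital marketing industry",
    word_range=(150, 200),
    venue="an SEO blog",
    tone="friendly and authoritative",
    sources=["official .gov sources"],
)
print(build_prompt(spec))
```

Keeping each requirement as a separate field makes it harder to forget one, such as the sources, when you write a new prompt.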

2. Limit possible mistakes

Besides giving AI a clear direction, you should set some boundaries for its response.

Ambiguous questions may be misunderstood and increase the chance of hallucinations.

One way to help your AI tool provide a correct answer is to ask limited-choice questions instead of open-ended ones.

Here are some examples that clarify this difference:

Open-ended: How has unemployment changed in recent times?

Limited-choice: What were the unemployment rates in 2021 and 2022 according to government data?

Open-ended: How much content should my website have?

Limited-choice: How many blog posts does an average business publish per month?

Open-ended: How do testimonials impact sales?

Limited-choice: Are testimonials more trustworthy than ads? Include recent research to support your answer.

The point is to ensure AI looks for specific data instead of having the liberty to come up with the answer on its own.

It's also important to instruct AI to admit when it can't find reputable sources to back up its claims.

ChatGPT does this by default for some prompts, but it's a good idea to explicitly mention it to stay on the safe side.
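Both habits, narrowing the question and asking for an explicit "I don't know," can be built into a reusable template. In this Python sketch, the fallback sentence and function names are assumptions chosen for illustration. A fixed fallback phrase is easy to search for, so you can tell which answers need manual research.

```python
from typing import List, Optional

# Hypothetical fallback phrase; any fixed, easy-to-search sentence works.
NO_SOURCE_REPLY = "I could not find a reputable source for this."


def limited_choice_prompt(question: str, options: Optional[List[str]] = None) -> str:
    """Turn a question into a constrained prompt with an explicit way to say 'I don't know'."""
    lines = [question]
    if options:
        lines.append("Answer with one of: " + "; ".join(options) + ".")
    lines.append("Support your answer with recent research and name the source.")
    lines.append(f'If you cannot find a reputable source, reply exactly: "{NO_SOURCE_REPLY}"')
    return "\n".join(lines)


def needs_manual_research(response: str) -> bool:
    """True when the model used the fallback instead of answering."""
    return NO_SOURCE_REPLY in response


prompt = limited_choice_prompt(
    "Are testimonials more trustworthy than ads?",
    options=["yes", "no", "it depends on the context"],
)
print(prompt)
```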

3. Include data sources

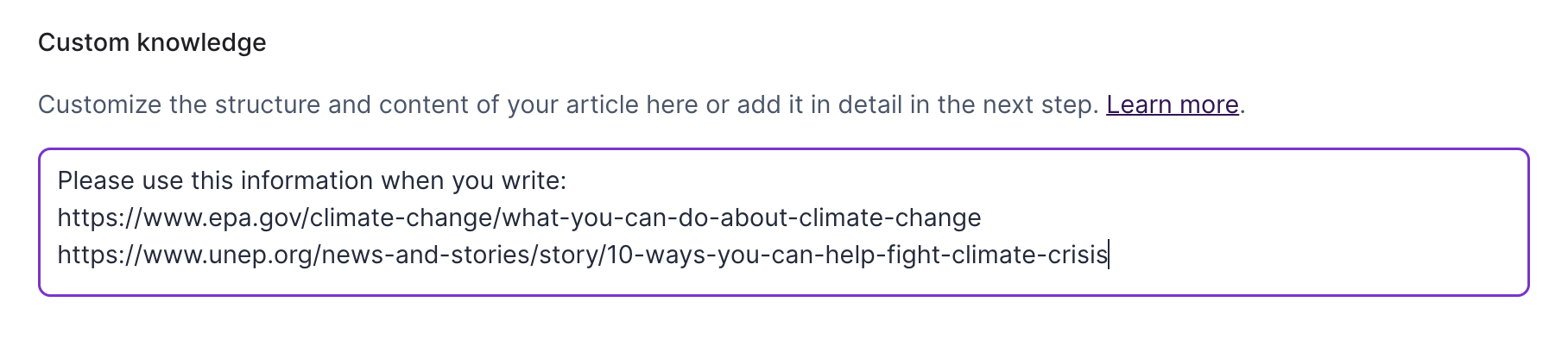

If you don't want your AI model to steer away from facts, you can tell it where to look for information.

Some of the example prompts you saw above do this by instructing AI to look for reputable research, but you can take it a step further and give your platform the specific websites you want it to use.

For instance, in the above unemployment rate example, you can tell AI to only use data from the U.S. Bureau of Labor Statistics.

This way, you'll know the answer came from a reputable source while still saving yourself the time you'd spend looking for these statistics manually.

Using verified sources instead of letting AI pull information from any random site on the web should significantly reduce the chances of hallucinations.

You can do this by including relevant websites that you'd like researched for your AI article.

For the climate change article, pages from the U.S. Environmental Protection Agency and the United Nations Environment Programme would be good data sources.

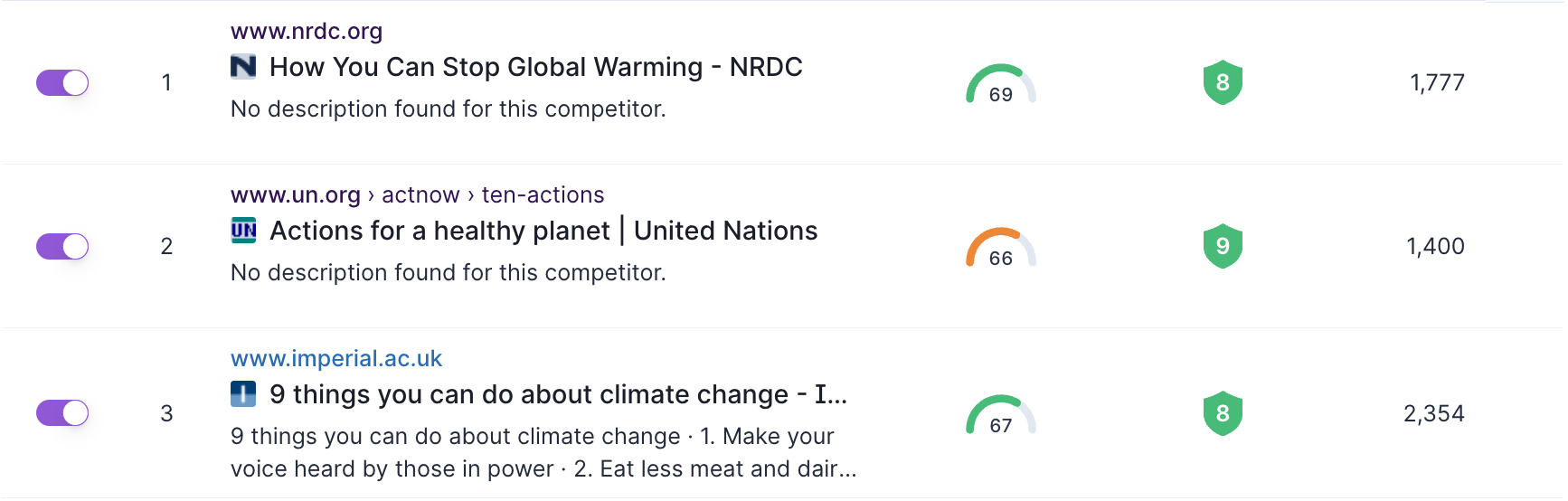

Although the organic competitors feature is mainly meant for competitor analysis, it also works well for supplying research and data sources.

Just be sure to select pages that are high quality and relevant, and Surfer AI will use these as research material before generating your article.
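If you run your own research step, for example a script that gathers pages before passing them to a model, you can enforce an allowlist of sources in code. The Python sketch below assumes such a pipeline exists (it isn't a Surfer or ChatGPT feature) and uses the official domains of the organizations mentioned in this section as the allowlist.

```python
from urllib.parse import urlparse

# Allowlist built from the sources mentioned in this guide.
ALLOWED_DOMAINS = frozenset({"bls.gov", "epa.gov", "unep.org"})


def is_allowed(url: str, allowed=ALLOWED_DOMAINS) -> bool:
    """Accept a URL only if its host is an allowed domain or one of its subdomains."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == domain or host.endswith("." + domain) for domain in allowed)


def filter_sources(urls):
    """Drop every URL that doesn't come from an allowed domain."""
    return [url for url in urls if is_allowed(url)]


candidates = [
    "https://www.bls.gov/",
    "https://www.epa.gov/",
    "https://random-blog.example.com/unemployment-facts",
    "https://bls.gov.example.net/fake-stats",  # look-alike host, rejected
]
print(filter_sources(candidates))
```

Matching on the exact host or a true subdomain, rather than checking whether "bls.gov" appears anywhere in the URL, keeps look-alike addresses out.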

4. Assign a role

Role designation is a useful prompting technique that gives AI more context behind the prompt and influences the style of the response. It can also improve factual accuracy, because the model frames its answer the way an expert would.

Assigning a role looks something like this:

"You're a digital marketing expert specializing in local SEO with over a decade of industry experience. What advice would you give a small business that still doesn't have an online presence, taking into account their limited budget?"

Such a prompt will usually yield a better answer than a generic instruction to provide local SEO tips for small businesses.

If you ask AI to demonstrate expertise and give it enough details, it tends to produce more careful, accurate answers.
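If you work with a model through an API rather than a chat window, the role usually goes into a separate system message. The sketch below builds messages in the role/content format used by chat APIs such as OpenAI's Chat Completions. It only constructs the data; sending it is up to whichever client library you use. The extra instruction about flagging uncertainty is an assumption you can adjust.

```python
from typing import Dict, List


def role_messages(role: str, question: str) -> List[Dict[str, str]]:
    """Put the expert persona in a system message and the question in a user message."""
    system = (
        f"You are {role}. "
        "If you are not confident a claim is accurate, say so instead of guessing."
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = role_messages(
    "a digital marketing expert specializing in local SEO "
    "with over a decade of industry experience",
    "What advice would you give a small business that still doesn't have an online "
    "presence, taking into account their limited budget?",
)
for message in messages:
    print(f"{message['role']}: {message['content']}")
```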

5. Tell AI what you don't want

Seeing as AI hallucinations occur largely due to unrestricted creativity paired with faulty training data, an effective way to reduce them is to preemptively guide the response through so-called "negative prompting."

Although this method is most often associated with image-generation tools, it can be just as useful for text generation.

Besides telling your AI tool what you expect to see in the response, you can add various limitations to narrow its focus, such as:

- "Don't include data older than five years."

- "Don't provide any financial or health advice."

- "Discard any information found on [specific URL]."

By adding negative prompts to your instructions, you can tailor the output to your needs while plugging the holes in AI's logic that may cause hallucinations.

This requires you to think a few steps ahead and predict where the model might go off course, which will become easier with time as you learn to communicate with it.
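Negative prompts are instructions, not guarantees, so it's worth pairing them with a quick check of the output. The Python sketch below appends "don't" rules to a prompt and then flags any year in a response that breaks the "no data older than five years" rule. The function names and the sample response are hypothetical, and the year check is a rough heuristic that only looks for four-digit years.

```python
import re
from datetime import date
from typing import List, Optional


def add_negative_constraints(prompt: str, constraints: List[str]) -> str:
    """Append a bulleted list of things the response must not do."""
    if not constraints:
        return prompt
    rules = "\n".join(f"- {rule}" for rule in constraints)
    return f"{prompt}\n\nRules:\n{rules}"


def years_older_than(text: str, max_age_years: int, today: Optional[date] = None) -> List[int]:
    """Return the years mentioned in the text that fall before the allowed cutoff."""
    today = today or date.today()
    cutoff = today.year - max_age_years
    years = {int(y) for y in re.findall(r"\b(?:19|20)\d{2}\b", text)}
    return sorted(y for y in years if y < cutoff)


prompt = add_negative_constraints(
    "Summarize recent trends in remote work.",
    ["Don't include data older than five years.", "Don't provide any financial or health advice."],
)
# Hypothetical model response used only to exercise the check.
response = "Remote work rose sharply in 2020, building on gains first seen in 2015."
print(years_older_than(response, 5, today=date(2024, 6, 1)))
```

With a cutoff of 2019, only 2015 is flagged, telling you exactly which part of the response ignored your constraint.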

6. Fact-check YMYL topics

As mentioned, AI hallucination can do significant damage when you're covering YMYL topics, which mainly boil down to financial and medical advice.

In many cases, all it takes is one wrong word for your content to provide inaccurate information that might negatively impact your audience.

A good example of this is Microsoft's BioGPT, an AI model designed specifically to answer medical questions.

Not only did the chatbot claim childhood vaccination could cause autism, but it also made up a source stating that the average American hospital is haunted by 1.4 ghosts.

This is why you must exercise extra caution when using AI for YMYL topics. Besides the obvious ethical concerns of spreading misinformation, inaccuracies can damage your SEO standing, because Google doesn't take kindly to errors in YMYL content.

This doesn't mean AI is useless if your website predominantly publishes such content.

You can still leverage it to create first drafts more quickly.

Just make sure to double-check any specific claims your AI tool makes.
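To make that double-check more systematic, you can pull out the sentences most likely to contain checkable claims before reviewing a draft. The Python sketch below is a rough heuristic: the patterns are assumptions, and it will miss claims phrased without numbers or research language. It flags sentences with figures, "according to," or references to studies. The sample text reuses the BioGPT claim mentioned above.

```python
import re
from typing import List

# Heuristic signals of a specific, checkable claim (not an exhaustive list).
CLAIM_PATTERNS = [
    r"\d",                                   # statistics, dates, dosages, prices
    r"\baccording to\b",
    r"\b(?:study|studies|research|survey)\b",
]


def claims_to_verify(text: str) -> List[str]:
    """Split text into sentences and keep the ones that look like specific claims."""
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    return [
        sentence for sentence in sentences
        if any(re.search(pattern, sentence, re.IGNORECASE) for pattern in CLAIM_PATTERNS)
    ]


draft = (
    "Hospitals follow strict hygiene rules. "
    "According to a study, the average American hospital is haunted by 1.4 ghosts. "
    "Always ask your doctor before changing medication."
)
for claim in claims_to_verify(draft):
    print("CHECK:", claim)
```

A list like this doesn't verify anything by itself, but it tells you where to spend your fact-checking time first.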

7. Adjust the temperature

The temperature setting is a useful feature that most users of AI tools don't know about. It controls the randomness of the model's response, so lowering it can help reduce the risk of hallucinations.

Depending on the tool, the temperature usually ranges from 0 to 1 or from 0 to 2, with higher numbers producing more creative, less predictable output.

With this in mind, a temperature of 0.4–0.7 is suitable for general content that blends accuracy with creativity.

Anything below this should make your content more deterministic and focused on correctness.
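Under the hood, temperature rescales the model's scores for each candidate next word before they're turned into probabilities. Here's a minimal Python sketch of that softmax-with-temperature step. The three candidate words and their scores are made up, and a real model applies this across its entire vocabulary.

```python
import math
import random
from typing import List, Sequence


def softmax_with_temperature(scores: Sequence[float], temperature: float) -> List[float]:
    """Convert raw scores to probabilities; lower temperature sharpens the distribution."""
    # Many APIs accept 0 and treat it as always picking the top word;
    # this sketch only handles positive values.
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtracting the max keeps math.exp from overflowing
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_word(words: Sequence[str], scores: Sequence[float],
                temperature: float, rng: random.Random) -> str:
    """Pick one word at random, weighted by its temperature-adjusted probability."""
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(list(words), weights=probs, k=1)[0]


words = ["nutritious", "healthy", "drool-worthy"]
scores = [2.0, 1.5, 0.5]  # hypothetical model scores

for t in (0.1, 0.9):
    probs = softmax_with_temperature(scores, t)
    print(t, [round(p, 3) for p in probs])

rng = random.Random(42)
print(sample_word(words, scores, 0.9, rng))
```

At 0.1, "nutritious" receives over 99% of the probability, so the output is nearly deterministic. At 0.9, the playful "drool-worthy" gets roughly a one-in-ten chance of being picked.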

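This might seem a bit technical for an average user, but adjusting it is straightforward. If you use a model through an API or a developer playground, temperature is simply a numeric setting. Chat interfaces like ChatGPT don't expose this setting, but you can still mention a temperature in your prompt. This doesn't change the model's actual sampling, though it usually nudges the style of the response in the same direction.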

We did a quick experiment with ChatGPT, telling it to provide a title idea for a blog post about dog food using a temperature of 0.1.

The response was:

"The Science of Sustenance: Exploring Nutrient-Rich Dog Food Formulas"

When instructed to repeat the task after adjusting the temperature to 0.9, the chatbot responded with:

"Drool-Worthy Doggie Dinners: A Gourmet Journey to Canine Cuisine"

As you can see, mentioning a temperature in your prompt noticeably influences how creative the response is. Keeping that creativity in check can help minimize hallucinations, although a low temperature doesn't guarantee accuracy on its own.

8. Fact-check AI content

Regardless of how useful AI is, you shouldn't copy and paste the content it produces. Make sure to verify everything before publishing to avoid false claims caused by hallucinations.

While there's ongoing research aimed at eliminating this issue, we can't know for sure when to expect any significant progress.

Even experts disagree on whether AI hallucinations are a fixable problem.

While Bill Gates took an optimistic stance in his July 2023 blog post detailing AI's societal risks, Emily Bender from the University of Washington's Computational Linguistics Laboratory believes AI hallucination is "inherent in the mismatch between the technology and the proposed use cases."

Even when AI evolves and becomes more accurate, there will always be a need for a human touch.

Keep fine-tuning your prompts to give your AI tool as much direction as possible, and then fact-check the output to stay on the safe side.

Examples of AI hallucinations

AI hallucinations range from mildly entertaining to full-on dangerous. There have been several notable cases of AI chatbots spreading false information about historical events, public figures, and well-known facts.

One such case happened in April 2023, when ChatGPT claimed that Australian mayor Brian Hood had served a prison sentence for bribery. Hood was indeed involved in the bribery scandal ChatGPT was referring to, but as a whistleblower, and he was never imprisoned.

Hood threatened to sue OpenAI for defamation, but the outcome is still unknown. OpenAI hasn't made a statement regarding the incident, so it remains to be seen whether the case will go to court.

Another infamous mistake was made by Google Bard, which claimed that the James Webb Space Telescope took the very first picture of an exoplanet.

The error appeared in a promotional demonstration of the model, and astronomers quickly pointed out that the first image of an exoplanet was taken in 2004 by the European Southern Observatory's Very Large Telescope. Shortly after, the market value of Alphabet, Google's parent company, dropped by about $100 billion.

AI hallucinations were also recognized before the recent generative AI boom.

Back in 2016, Microsoft released a Twitter AI bot named Tay.

Only a day after its launch, Tay started generating racist tweets using the language it picked up from other users. Microsoft couldn't remediate the issue and was forced to shut Tay down.

The above examples show that AI has a long way to go before it can be considered fully reliable. Until then, it's best to avoid taking everything it says at face value.

Key takeaways

- Giving AI too much freedom can cause hallucinations and lead to the model generating false statements and inaccurate content. This mainly happens due to poor training data, though other factors like vague prompts and language-related issues can also contribute to the problem.

- AI hallucinations can have various negative consequences. They might cause you to misinform your audience, damage your brand's reputation, and hurt your search rankings. This is particularly true for YMYL topics, where accuracy is crucial.

- To minimize the risk of AI hallucinations, give your chatbot enough context and limit the room for error through clear, direct prompts. Focus on limited-choice questions over open-ended ones, and include data sources where possible.

- Another way to avoid hallucinations is to assign a role to AI. Doing so puts it in the shoes of an expert, which can reduce the chance of inaccuracies. It's also a good idea to tell the chatbot what you don't want to see in the response to give it further direction.

- You can control AI's randomness directly by adjusting the temperature. Lower temperatures make the response more deterministic, so use a low setting whenever accuracy matters and your tool allows it.

- Even if you follow the above steps, make sure to fact-check the content created by AI before publishing it. Focus on any specific data that it might've gotten wrong to ensure your content is error-free.

Conclusion

While AI has evolved massively over the last couple of years, we're still in the early stages of its growth, so it's no surprise that there are still some issues to iron out.

Until that happens, using AI under human supervision is a smart move. Leverage it to shorten the content creation process and boost your productivity, but double-check the result to ensure your content is accurate and trustworthy.